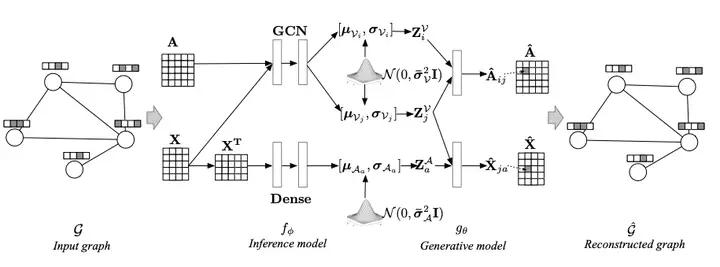

The architecture of our proposed CAN model

Abstract

Existing embedding methods for attributed networks learn low-dimensional vector representations for nodes only, not for both nodes and attributes, and therefore cannot capture the affinities between nodes and attributes. However, capturing such affinities is crucial to many real-world attributed network applications, such as attribute inference and user profiling. Accordingly, in this paper we introduce a Co-embedding model for Attributed Networks (CAN), which learns low-dimensional representations of both attributes and nodes in the same semantic space so that the affinities between them can be effectively captured and measured. To obtain high-quality embeddings, we propose a variational auto-encoder that embeds each node and each attribute as a Gaussian distribution parameterized by its mean and variance. Experimental results on real-world networks demonstrate that our model yields excellent performance in a number of applications compared with state-of-the-art techniques.
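To make the idea of Gaussian co-embeddings concrete, the following is a minimal sketch rather than the CAN implementation. It assumes that an encoder has already produced a mean and a log-variance for every node and attribute (here filled with toy values), draws embeddings with the standard reparameterization trick, and scores affinities with a sigmoid over inner products. The inner-product scoring, the toy sizes, and all names (`sample_gaussian_embedding`, `node_mean`, `attr_mean`, and so on) are illustrative assumptions, not details given in the abstract.

```python
import numpy as np


def sample_gaussian_embedding(mean, log_var, rng):
    """Reparameterization trick: z = mean + std * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mean.shape)
    return mean + np.exp(0.5 * log_var) * eps


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


rng = np.random.default_rng(0)
num_nodes, num_attrs, dim = 4, 3, 2  # hypothetical toy sizes

# Stand-ins for encoder outputs (assumed; a real model would learn these).
node_mean = rng.standard_normal((num_nodes, dim))
node_log_var = np.full((num_nodes, dim), -2.0)
attr_mean = rng.standard_normal((num_attrs, dim))
attr_log_var = np.full((num_attrs, dim), -2.0)

# Nodes and attributes are sampled into the same dim-dimensional space.
z_nodes = sample_gaussian_embedding(node_mean, node_log_var, rng)
z_attrs = sample_gaussian_embedding(attr_mean, attr_log_var, rng)

# Assumed scoring: sigmoid of inner products between embeddings.
node_attr_affinity = sigmoid(z_nodes @ z_attrs.T)  # shape (num_nodes, num_attrs)
node_node_affinity = sigmoid(z_nodes @ z_nodes.T)  # shape (num_nodes, num_nodes)
```

Because both kinds of embeddings live in one space, the same scoring function applies to node-attribute pairs (for example, attribute inference) and to node-node pairs (for example, link prediction) in this sketch.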
