GLA-SD - Towards General LLM-based Agents for Scientific Discovery.

Large Language Models (LLMs) have revolutionized numerous domains, with scientific discovery poised to experience a profound transformation. LLM-based agents, enhanced by capabilities such as planning, memory, reflection, and tool use, are designed to execute complex tasks autonomously. These agents are already demonstrating their potential by solving advanced problems in fields like mathematics, gaming, software development, biomedicine, and material science. With their ability to process vast amounts of data, generate hypotheses, and assist in decision-making, LLM-based agents are increasingly seen as essential collaborators in accelerating scientific research. The potential of these agents to contribute meaningfully to automated scientific discovery is vast, opening up possibilities for breakthroughs in how knowledge is generated and applied across disciplines.

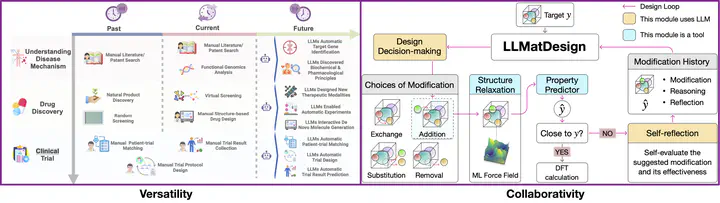

Despite remarkable advances in Artificial Intelligence (AI), applying LLM-based agents to automated scientific discovery still faces significant technical and societal challenges, particularly in versatility, interpretability, collaborativity, and trustworthiness. Major technology leaders such as OpenAI, Microsoft, Apple, ByteDance, and LangChain are investing heavily in AI agent frameworks, aiming to shape the future of AI agent research and application. However, existing agents tend to specialize in narrow fields, excelling in single domains such as material science, quantum physics, or biomedicine, but lacking the versatility for interdisciplinary tasks and the ability to stay up to date with rapidly evolving knowledge. Interpretability is equally important: scientists need AI agents that produce precise, interpretable outputs while offering flexible, dynamic reasoning for a range of needs, from brainstorming and hypothesis generation to decision-making. To truly automate scientific discovery, AI agents must integrate knowledge across domains, continuously update themselves to reflect the latest advances, and generate interpretable output.

Currently, effective human-AI collaboration is still a grand challenge. While agents are increasingly capable, there is still no clear design framework to allow intelligible and responsive collaborations where researchers can guide, understand, refine, and work with them effectively. This lack of collaborativity hinders the full potential of AI in advancing scientific discovery workflows. Moreover, with the increasing power of AI in high-stakes fields, there is growing concern about its potential misuse. Unchecked, AI agents could be employed irresponsibly in ways that lead to flawed research, ethical violations, or even the proliferation of biased or harmful findings. Ensuring that AI systems are compliant with strict ethical, legal, and regulatory standards is crucial to preventing misuse and fostering responsible innovation.

GLA-SD aims to advance the technologies of LLM-based agents for automated scientific discovery by tackling challenges of versatility, interpretability, collaborativity, and trustworthiness. These agents will integrate domain-specific knowledge and advanced reasoning to generate precise, robust, and contextually accurate scientific insights, while prioritizing human-AI synergy, ethical responsibility, and legal compliance to ensure transparent, interpretable, and trustworthy AI-driven scientific discovery workflows.

Objectives

- Elevate Domain Expertise and Knowledge Fidelity: Develop LLM-based collaborative agents capable of comprehending, integrating, and editing domain-specific knowledge, while generating specialized and interdisciplinary scientific insights. These agents will ensure high levels of precision, robustness, and contextual faithfulness across rapidly evolving and multidisciplinary scientific fields.

- Advance Knowledge-Grounded Reasoning and Interpretability: Integrate Retrieval-Augmented Generation (RAG) and advanced structured-knowledge reasoning methods (e.g., knowledge graph reasoning, multi-hop reasoning) into AI agents to enhance the reasoning capabilities of LLMs, ensuring outputs that are not only contextually sound but also knowledge-grounded, systematically interpretable, and transparent to human experts.

- Optimize Human-AI Synergy and Operational Collaborativity: Design adaptive, intuitive interaction frameworks that promote seamless collaboration between human scientists and AI agents, enabling dynamic refinement of outputs, continuous feedback integration, and the streamlining of complex scientific workflows.

- Ensure Compliance, Reliability, and Ethical Integrity in Scientific Discovery: Develop AI agents that meet the highest ethical, regulatory, and legal standards in scientific research, incorporating real-time impact assessment mechanisms to ensure transparency, reliability, and accountability. Establish automated auditing systems and collaborate with experts across disciplines to guide responsible AI use in high-stakes domains.

Work Packages and Methodology

WP1: Elevating Domain Expertise and Knowledge Fidelity [PDRA1, PhD1] (O1)

Goal: Develop LLM-based collaborative agents capable of comprehending, integrating, and editing domain-specific knowledge while generating specialized and interdisciplinary scientific insights.

Methodology:

- Develop LLM-based Agents for Domain-Specific Knowledge Integration: Focus on parameter-efficient fine-tuning, retrieval-augmented generation, and advanced in-context learning methodologies to allow LLMs to comprehend and integrate domain-specific knowledge dynamically.

- Enhance Precision and Faithfulness Across Interdisciplinary Fields: Build LLM agents to work collaboratively across multiple scientific domains, ensuring interdisciplinary outputs remain reliable, contextually relevant, and grounded in scientific expertise.

- Enable Multi-Agent Collaboration for Interdisciplinary Insights: Develop a framework where multiple LLM agents work together to generate and validate insights across various scientific domains.

- Evaluate Knowledge Fidelity and Interdisciplinary Utility: Use human-in-the-loop benchmarks to evaluate knowledge fidelity, relevance, and utility in specialized and interdisciplinary scenarios.

Outcomes: Novel methodologies in LLM knowledge integration, probing, editing, RAG, and evaluation; a multi-agent collaborative framework for producing interdisciplinary scientific insights; human-in-the-loop benchmarks to evaluate interdisciplinary utility and knowledge fidelity.
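As a rough illustration of the retrieval-augmented generation step named in the WP1 methodology, the sketch below ranks a toy corpus against a query and assembles a grounded prompt. It is not the project's implementation: the bag-of-words similarity stands in for a learned embedding model, no LLM is called, and the names `bag_of_words`, `retrieve`, `build_prompt`, and `CORPUS` are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a simple stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(corpus, key=lambda doc: cosine(q, bag_of_words(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Number the retrieved passages so an answer can cite its sources."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Answer using only the numbered sources.\n{context}\nQuestion: {query}"


# Toy corpus (illustrative only).
CORPUS = [
    "Perovskite solar cells degrade quickly when exposed to humidity.",
    "Kinase inhibitors are a major class of targeted cancer drugs.",
    "Graphene has very high electron mobility at room temperature.",
]

question = "Why do perovskite solar cells degrade?"
top = retrieve(question, CORPUS, k=1)
prompt = build_prompt(question, top)
```

In a real agent, the prompt would be passed to an LLM and the numbered sources would let a reviewer check each claim against the retrieved passage.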

WP2: Advancing Knowledge-Grounded Reasoning and Interpretability [PDRA1, PhD2] (O2)

Goal: Integrate RAG and advanced reasoning techniques, such as knowledge graph reasoning and multi-hop reasoning, into LLM agents to enhance their reasoning capabilities.

Methodology:

- Implement Advanced Knowledge-Grounded Reasoning: Incorporate advanced reasoning techniques such as knowledge graph reasoning and multi-hop reasoning into the LLM agents.

- Integrate RAG for Dynamic Knowledge Retrieval: Seamlessly incorporate RAG into reasoning workflows to ensure dynamic access to the most up-to-date, verified data sources.

- Develop Frameworks for Interpretability and Transparency: Create frameworks that allow researchers to trace the reasoning pathways of LLMs, fostering human-AI collaboration.

- Evaluate Reasoning Capabilities Across Domains: Evaluate the reasoning abilities of LLMs through case studies across diverse scientific fields, using both LLM-as-a-judge evaluation and assessment by human experts.

Outcomes: LLM agents with enhanced reasoning capabilities, grounded in real-time data retrieval; transparent and interpretable reasoning processes; a benchmarking framework to evaluate reasoning and interpretability of LLM agents across domains.
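To make the idea of traceable multi-hop reasoning over a knowledge graph concrete, here is a minimal sketch that searches a small set of triples breadth-first and returns the chain of facts linking two entities. It is an assumption-laden illustration rather than the method proposed in WP2: the function `find_path`, the `max_hops` parameter, and the toy triples are all hypothetical.

```python
from collections import deque
from typing import Optional

Triple = tuple[str, str, str]  # (head entity, relation, tail entity)


def find_path(
    triples: list[Triple], start: str, goal: str, max_hops: int = 3
) -> Optional[list[Triple]]:
    """Breadth-first search for the shortest chain of triples from start to goal.

    The returned list is the reasoning trace: each element is one hop that a
    human expert can inspect. Returns None if no chain exists within max_hops.
    """
    graph: dict[str, list[tuple[str, str]]] = {}
    for head, relation, tail in triples:
        graph.setdefault(head, []).append((relation, tail))

    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue  # do not expand beyond the hop budget
        for relation, tail in graph.get(node, []):
            if tail not in visited:
                visited.add(tail)
                queue.append((tail, path + [(node, relation, tail)]))
    return None


# Toy knowledge graph (illustrative only).
KG = [
    ("aspirin", "inhibits", "COX-1"),
    ("COX-1", "produces", "thromboxane A2"),
    ("thromboxane A2", "promotes", "platelet aggregation"),
]

trace = find_path(KG, "aspirin", "platelet aggregation")
for head, relation, tail in trace or []:
    print(f"{head} --{relation}--> {tail}")
```

Returning the path itself, rather than only a yes/no answer, is what makes the reasoning inspectable; an LLM agent could be asked to verbalize or justify each hop.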

WP3: Optimizing Human-AI Synergy and Operational Collaborativity [PhD3] (O3)

Goal: Design adaptive, intuitive interaction frameworks that promote seamless collaboration between human scientists and AI agents, enabling dynamic refinement of outputs, continuous feedback integration, and streamlining of complex scientific workflows.

Methodology:

- Develop Adaptive Interaction Frameworks: Design interaction frameworks that allow researchers to collaborate with AI agents using natural language inputs and provide flexible control over outputs.

- Prototype Real-Time Collaboration Tools: Develop collaboration tools that enable dynamic feedback loops between human researchers and AI agents.

- Streamline Scientific Workflows: Automate routine scientific workflows through agent planning and automated workflow execution.

- Evaluate Interaction Frameworks in Real-World Scenarios: Test the interaction tools in real-world scientific settings, such as drug discovery.

Outcomes: Intuitive, adaptive interaction frameworks; prototypes for real-time collaboration in scientific workflows; improved operational collaborativity and streamlined workflows through automated task management.
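The dynamic feedback loop between researcher and agent described in WP3 could be sketched as follows. This is only a schematic under stated assumptions: the agent and reviewer are plain callables, `stub_agent` and `stub_reviewer` are hypothetical stand-ins for an LLM call and a human scientist, and `refine` is an illustrative name rather than part of any existing framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Session:
    """Records every draft and the feedback it received, for later auditing."""
    task: str
    history: list[tuple[str, Optional[str]]] = field(default_factory=list)


def refine(
    task: str,
    agent: Callable[[str, Optional[str]], str],
    reviewer: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> tuple[str, Session]:
    """Alternate agent drafts and reviewer feedback until approval or the round limit."""
    session = Session(task)
    feedback: Optional[str] = None
    draft = ""
    for _ in range(max_rounds):
        draft = agent(task, feedback)
        feedback = reviewer(draft)  # None means the reviewer approves
        session.history.append((draft, feedback))
        if feedback is None:
            break
    return draft, session


def stub_agent(task: str, feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM call that revises based on feedback."""
    base = f"Plan for {task}"
    return base if feedback is None else f"{base}, revised to {feedback}"


def stub_reviewer(draft: str) -> Optional[str]:
    """Hypothetical stand-in for a human scientist's review."""
    return None if "controls" in draft else "include negative controls"


final_draft, session = refine("kinase assay", stub_agent, stub_reviewer)
```

Keeping the full history in `Session` means each revision can be traced back to the feedback that prompted it.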

WP4: Ensuring Compliance, Reliability, and Ethical Integrity [PDRA2] (O4)

Goal: Develop AI agents that adhere to the highest standards of regulatory, ethical, and legal frameworks governing scientific research, fostering trust through demonstrable reliability, transparency, and responsible decision-making across diverse scientific applications.

This research proposal was originally developed for the ERC Starting Grant. While not selected for funding, the research agenda remains active and ongoing. Recent publications related to this research direction include:

- Zeng, R., Fang, J., Liu, S., & Meng, Z. (2024). On the Structural Memory of LLM Agents. arXiv preprint arXiv:2412.15266.

- Fang, J., Peng, Y., Zhang, X., Wang, Y., Yi, X., Zhang, G., … & Meng, Z. (2025). A Comprehensive Survey of Self-Evolving AI Agents: A New Paradigm Bridging Foundation Models and Lifelong Agentic Systems. arXiv preprint arXiv:2508.07407.

For the latest publications and developments related to this research direction, please visit my Google Scholar profile.